NLTK

Last updated

Jun 17, 2023

https://www.nltk.org/book/

# Introduction

NLTK (Natural Language Toolkit) is a Python library.

# help

    import nltk

    # Look up the description of a tag in the Penn Treebank and Brown tagsets
    nltk.help.upenn_tagset('DT')
    nltk.help.brown_tagset('DT')

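Given a set of grammar rules, syntactic analysis determines the structure of a given sentence.

It has two main tasks:

- Strukturerkennung (structure recognition): is the sentence grammatical, i.e. is there a derivation that conforms to the rules?

- Strukturzuweisung (structure assignment): reconstruct the derivation that was found.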
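The two tasks can be illustrated with a small sketch. The toy grammar and the helper names `recognize` and `assign` are assumptions made for this illustration; they are not part of NLTK.

```python
import nltk

# Toy grammar, assumed only for this illustration
grammar = nltk.CFG.fromstring("""
S -> NP VP
NP -> 'she' | 'fish'
VP -> V NP | V
V -> 'eats' | 'sleeps'
""")
parser = nltk.ChartParser(grammar)

def recognize(tokens):
    """Structure recognition: is there at least one derivation?"""
    try:
        return next(iter(parser.parse(tokens)), None) is not None
    except ValueError:
        # raised when a token is not covered by the grammar
        return False

def assign(tokens):
    """Structure assignment: return every derivation as a tree."""
    return list(parser.parse(tokens))

print(recognize("she eats fish".split()))
print(recognize("eats she fish".split()))
for tree in assign("she eats fish".split()):
    print(tree)
```

Recognition only needs a yes/no answer, so it can stop at the first tree; assignment collects all of them.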

# Grammar types in NLTK

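LMU: Syntax of natural language (Germany)

The example sentence as a token list:

    sent = ['I', 'shot', 'an', 'elephant', 'in', 'my', 'pajamas']

The same list can be produced with `split()`:

    # no trailing period: 'pajamas.' would not match the grammar terminal 'pajamas'
    sent = "I shot an elephant in my pajamas".split()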

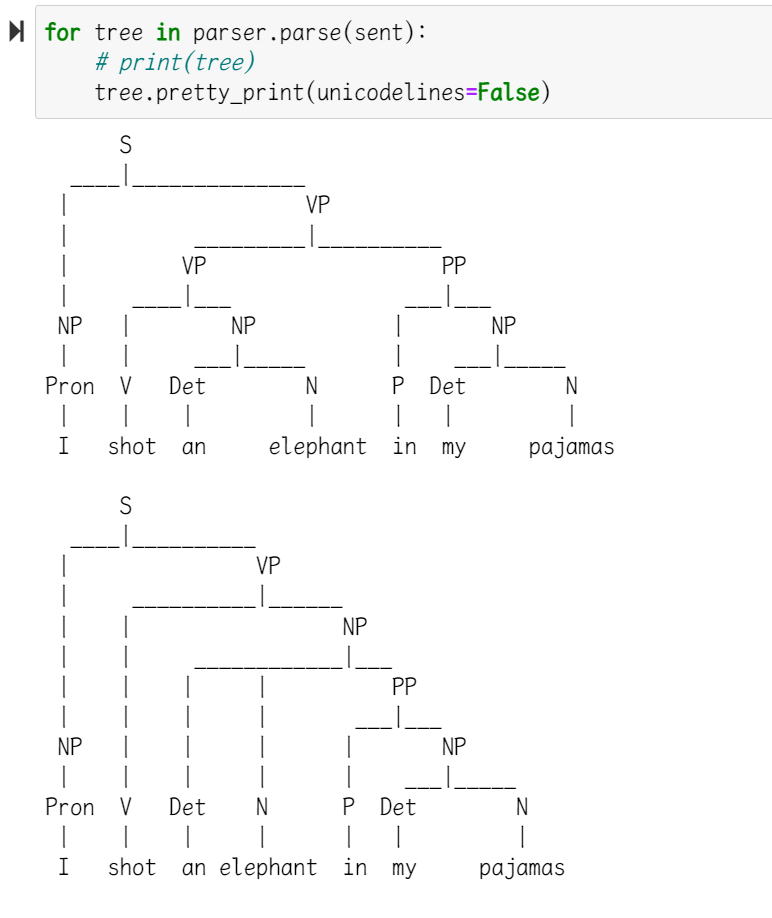

# Parsing sentences with a CFG

- nltk.CFG.fromstring

- nltk.ChartParser/nltk.RecursiveDescentParser


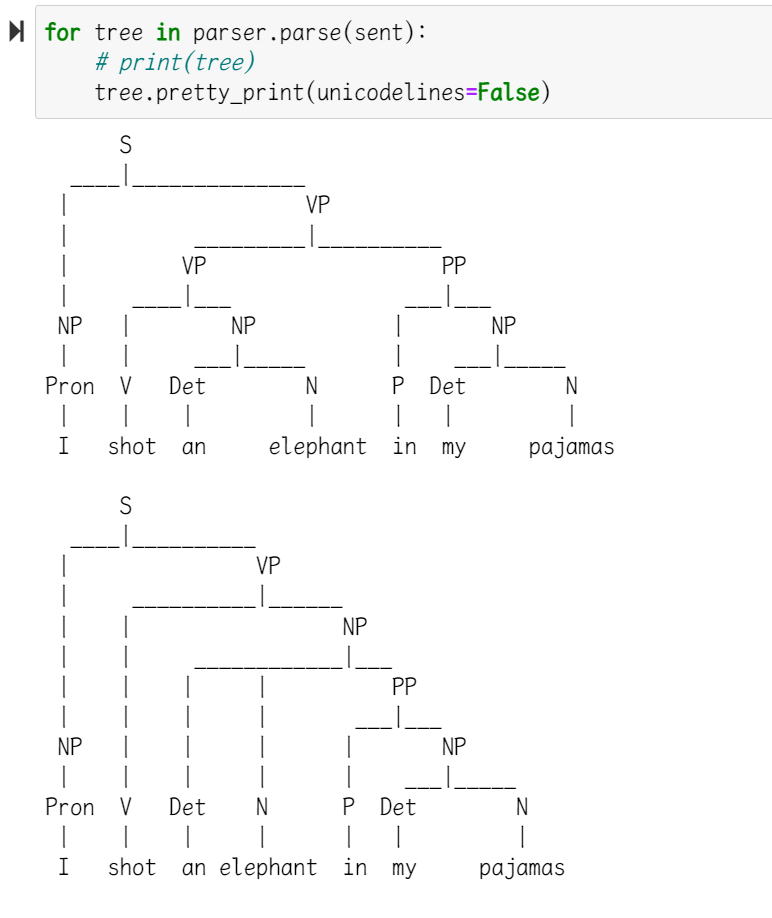

    import nltk

    # Grammar definition
    grammar = nltk.CFG.fromstring("""
    S -> NP VP
    PP -> P NP
    NP -> Det N | Det N PP | Pron
    VP -> V NP | VP PP
    Pron -> 'I'
    Det -> 'an' | 'my'
    N -> 'elephant' | 'pajamas'
    V -> 'shot'
    P -> 'in'
    """)

    # Create a parser from the grammar
    parser = nltk.ChartParser(grammar)  # optional: trace=3 for verbose output

    # Visualize the result (sent is the token list defined above)
    for tree in parser.parse(sent):
        tree.pretty_print(unicodelines=False)
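Under this grammar the sentence is structurally ambiguous: the PP "in my pajamas" can attach to the verb phrase or to the noun phrase. The following sketch repeats the grammar so that it is self-contained and simply collects all parses. One caveat worth knowing: the left-recursive rule `VP -> VP PP` makes `nltk.RecursiveDescentParser` recurse without end, so a chart parser is used here.

```python
import nltk

grammar = nltk.CFG.fromstring("""
S -> NP VP
PP -> P NP
NP -> Det N | Det N PP | Pron
VP -> V NP | VP PP
Pron -> 'I'
Det -> 'an' | 'my'
N -> 'elephant' | 'pajamas'
V -> 'shot'
P -> 'in'
""")

sent = "I shot an elephant in my pajamas".split()

# A chart parser copes with the left-recursive rule VP -> VP PP
trees = list(nltk.ChartParser(grammar).parse(sent))

print(len(trees))  # one tree per attachment of the PP
for tree in trees:
    print(tree)
```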

PCFG

- nltk.PCFG.fromstring

- nltk.ViterbiParser

FCFG

- Based on RegExp

- grammar = string

- nltk.grammar.FeatureGrammar.fromstring

- nltk.parse.FeatureChartParser

For details, see 01-vorlesung.ipynb
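As a rough sketch of the PCFG entry in the list above: the rule probabilities below are invented for illustration (they are not taken from the notebook), and the grammar is the elephant grammar with weights attached. `nltk.ViterbiParser` returns only the most probable parse.

```python
import nltk

# Probabilities are assumptions for this sketch; each left-hand side sums to 1
pcfg = nltk.PCFG.fromstring("""
S -> NP VP [1.0]
PP -> P NP [1.0]
NP -> Det N [0.5] | Det N PP [0.2] | Pron [0.3]
VP -> V NP [0.7] | VP PP [0.3]
Pron -> 'I' [1.0]
Det -> 'an' [0.5] | 'my' [0.5]
N -> 'elephant' [0.5] | 'pajamas' [0.5]
V -> 'shot' [1.0]
P -> 'in' [1.0]
""")

tokens = "I shot an elephant in my pajamas".split()
parser = nltk.ViterbiParser(pcfg)

# The Viterbi parser yields the single most probable tree
best = next(parser.parse(tokens))
print(best.prob())
print(best)
```

With these particular weights the parse that attaches the PP to the noun phrase wins; changing the weights of `NP -> Det N PP` and `VP -> VP PP` can flip the preference.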

# Generating sentences from grammar rules

    import nltk
    from nltk.parse.generate import generate

    grammar = nltk.CFG.fromstring("""
    S -> NP VP
    VP -> 'V' | 'V' NP | 'V' NP NP
    NP -> 'DET' 'N' | 'N'
    """)

    # depth=3: limit the derivation tree to a maximum depth of 3
    for sentence in generate(grammar, depth=3):
        print(' '.join(sentence))
    # out:
    # DET N V
    # N V

    # How many sentences are there?
    print(len(list(generate(grammar, depth=3))))
    # out:
    # 2
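To see how the `depth` limit shapes the result, the sketch below counts the generated sentences for two depths and uses the `n` parameter of `generate` to cap the number of results. The variable names `counts` and `first_five` are only illustrative.

```python
import nltk
from nltk.parse.generate import generate

grammar = nltk.CFG.fromstring("""
S -> NP VP
VP -> 'V' | 'V' NP | 'V' NP NP
NP -> 'DET' 'N' | 'N'
""")

# Count the sentences produced for each depth limit
counts = {}
for depth in (3, 4):
    sentences = [" ".join(s) for s in generate(grammar, depth=depth)]
    counts[depth] = len(sentences)
    print(depth, len(sentences))

# n caps the number of generated sentences
first_five = [" ".join(s) for s in generate(grammar, depth=4, n=5)]
print(first_five)
```

At depth 3 the object NPs inside the VP cannot be expanded down to terminals, which is why only the intransitive sentences appear; one more level makes the transitive and ditransitive variants reachable.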

# grammar.productions()

    import nltk

    grammar = nltk.CFG.fromstring("""
    S -> NP VP
    VP -> 'V' | 'V' NP | 'V' NP NP
    NP -> 'DET' 'N' | 'N'
    """)

    # Prints the rules one at a time
    for p in grammar.productions():
        print(p)

out:

    S -> NP VP
    VP -> 'V'
    VP -> 'V' NP
    VP -> 'V' NP NP
    NP -> 'DET' 'N'
    NP -> 'N'
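`productions()` also accepts a filter. A small sketch, assuming the same grammar, that selects the rules for one left-hand side and separates rules containing terminals from purely structural ones; the names `vp_rules` and `lexical` are illustrative.

```python
import nltk
from nltk import Nonterminal

grammar = nltk.CFG.fromstring("""
S -> NP VP
VP -> 'V' | 'V' NP | 'V' NP NP
NP -> 'DET' 'N' | 'N'
""")

# The start symbol is the left-hand side of the first rule
print(grammar.start())

# Only the rules that expand VP
vp_rules = grammar.productions(lhs=Nonterminal("VP"))
for p in vp_rules:
    print(p)

# Rules whose right-hand side contains at least one terminal
lexical = [p for p in grammar.productions() if p.is_lexical()]
print(len(lexical))
```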

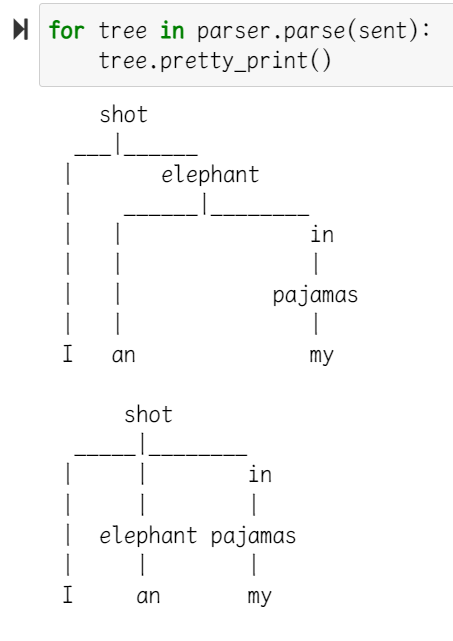

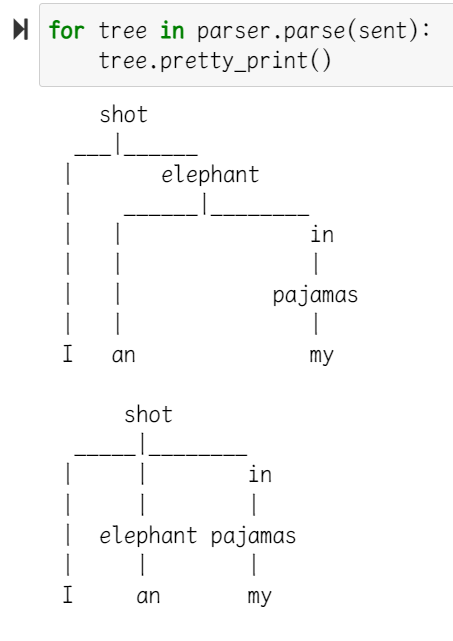

# Parsing sentences with a Dependency Grammar

- nltk.DependencyGrammar.fromstring

- nltk.ProjectiveDependencyParser

An unlabeled dependency grammar:

    import nltk

    grammar = nltk.DependencyGrammar.fromstring("""
    'shot' -> 'I' | 'elephant' | 'in'
    'elephant' -> 'an' | 'in'
    'in' -> 'pajamas'
    'pajamas' -> 'my'
    """)

    parser = nltk.ProjectiveDependencyParser(grammar)

    # sent is the token list defined earlier
    for tree in parser.parse(sent):
        tree.pretty_print()
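A self-contained variant of the example above, as a sketch: it first checks individual head/dependent pairs with `DependencyGrammar.contains` and then collects the parses. Each resulting tree is rooted in the head word of the sentence.

```python
import nltk

grammar = nltk.DependencyGrammar.fromstring("""
'shot' -> 'I' | 'elephant' | 'in'
'elephant' -> 'an' | 'in'
'in' -> 'pajamas'
'pajamas' -> 'my'
""")

# Is 'elephant' licensed as a dependent of 'shot', and the other way round?
print(grammar.contains('shot', 'elephant'))
print(grammar.contains('elephant', 'shot'))

parser = nltk.ProjectiveDependencyParser(grammar)
tokens = "I shot an elephant in my pajamas".split()

trees = list(parser.parse(tokens))
for tree in trees:
    print(tree)
```

Because 'in' is allowed as a dependent of both 'shot' and 'elephant', the PP attachment ambiguity shows up here as well.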

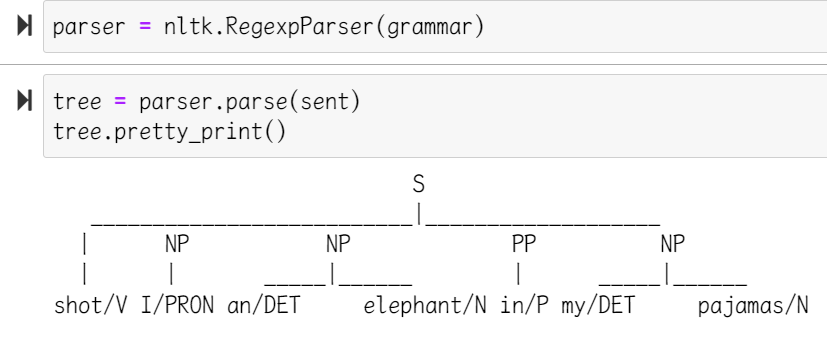

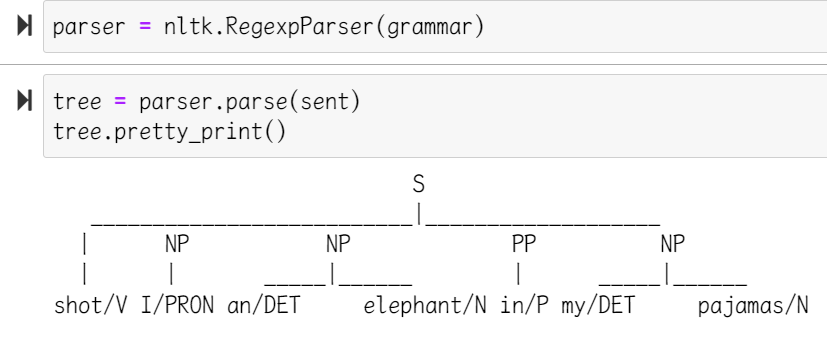

# Parsing sentences with a Chunk Parser

- regexp

- nltk.RegexpParser(grammar)

A partial, flat RegExp grammar:

    import nltk

    grammar = r"""
    NP: {<DET>?<ADJ>*<N>}
        {<PRON>}
    PP: {<P>}
    """

    # the chunk parser works on (word, tag) pairs
    sent = [("I", "PRON"), ("shot", "V"), ("an", "DET"), ("elephant", "N"),
            ("in", "P"), ("my", "DET"), ("pajamas", "N")]

    parser = nltk.RegexpParser(grammar)
    tree = parser.parse(sent)
    tree.pretty_print()
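Once the chunk tree exists, the chunks of one type can be pulled out with `Tree.subtrees`. A minimal sketch using the same grammar and tagged sentence; `np_chunks` is an illustrative name.

```python
import nltk

grammar = r"""
NP: {<DET>?<ADJ>*<N>}
    {<PRON>}
PP: {<P>}
"""

sent = [("I", "PRON"), ("shot", "V"), ("an", "DET"), ("elephant", "N"),
        ("in", "P"), ("my", "DET"), ("pajamas", "N")]

tree = nltk.RegexpParser(grammar).parse(sent)

# Collect the words of every NP chunk, in sentence order
np_chunks = [
    " ".join(word for word, tag in subtree.leaves())
    for subtree in tree.subtrees(lambda t: t.label() == "NP")
]
print(np_chunks)
```

Tokens that match no rule (here the verb) stay directly under the root, which is what makes the analysis partial and flat.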

# Constituent Tests

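# Deletion test with a CFG

LMU: Syntax of natural language (Germany)

- Provide the syntactic and lexical rules for the original sentence.

- Make sure the original sentence can be parsed into a tree, as in the CFG example above.

- Then pass the parser the sentence with the candidate constituent deleted.

For details, see vorlesung-notebook/04-vorlesung.ipynb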
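The steps above can be sketched as follows. This is not the notebook's code: the grammar is the elephant grammar from earlier in these notes, and the helpers `parses` and `deletion_test` are hypothetical names introduced for the illustration.

```python
import nltk

grammar = nltk.CFG.fromstring("""
S -> NP VP
PP -> P NP
NP -> Det N | Det N PP | Pron
VP -> V NP | VP PP
Pron -> 'I'
Det -> 'an' | 'my'
N -> 'elephant' | 'pajamas'
V -> 'shot'
P -> 'in'
""")
parser = nltk.ChartParser(grammar)

def parses(tokens):
    """True if the grammar yields at least one tree for the tokens."""
    return next(iter(parser.parse(tokens)), None) is not None

def deletion_test(tokens, start, end):
    """Delete tokens[start:end] and check whether the rest still parses."""
    return parses(tokens[:start] + tokens[end:])

sent = "I shot an elephant in my pajamas".split()

print(parses(sent))               # the original sentence must parse first
print(deletion_test(sent, 4, 7))  # delete "in my pajamas"
print(deletion_test(sent, 5, 6))  # delete "my"
```

A successful deletion only suggests constituent status; obligatory constituents (such as the object of "shot" here) cannot be deleted even though they are constituents.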

# Parsing sentences with a feature-based grammar

- nltk.grammar.FeatureGrammar.fromstring()

- nltk.parse.FeatureChartParser()

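With `tracing` set to 0 (no trace output) or 2 (trace output):

    # gramstring holds a feature grammar as a string,
    # such as the NP-agreement grammar later in these notes
    tracing = 0  # or 2
    grammar = nltk.grammar.FeatureGrammar.fromstring(gramstring)
    parser = nltk.parse.FeatureChartParser(grammar, trace=tracing)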
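A self-contained sketch of these two calls, using the NP-agreement grammar that appears later in these notes as `gramstring`. The test phrases are chosen here for illustration: "der Hund" agrees in gender, "die Hund" does not.

```python
import nltk

gramstring = r"""
% start NP
NP[AGR=?x] -> DET[AGR=?x] N[AGR=?x]
N[AGR=[NUM=sg, GEN=mask]] -> "Hund"
N[AGR=[NUM=sg, GEN=fem]] -> "Katze"
DET[AGR=[NUM=sg, GEN=mask, CASE=nom]] -> "der"
DET[AGR=[NUM=sg, GEN=mask, CASE=akk]] -> "den"
DET[AGR=[NUM=sg, GEN=fem]] -> "die"
"""

grammar = nltk.grammar.FeatureGrammar.fromstring(gramstring)
parser = nltk.parse.FeatureChartParser(grammar, trace=0)

# Agreement holds: the AGR values of determiner and noun unify
good = list(parser.parse("der Hund".split()))
# Agreement fails: GEN=fem clashes with GEN=mask
bad = list(parser.parse("die Hund".split()))

print(len(good), len(bad))
for tree in good:
    print(tree)
```

The shared variable `?x` is what enforces agreement: both daughters must unify with the same AGR value, which then becomes the AGR of the NP.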

## POS Tagging

#### Tagging a text with a chosen tagset

```python
import nltk

text = "We want to tag the words in this text example."
tokens = nltk.word_tokenize(text)

tags1 = nltk.pos_tag(tokens)  # default: Penn Treebank tagset
tags2 = nltk.pos_tag(tokens, tagset="universal")

print(tags1)
print(tags2)
# out:
# [('We', 'PRP'), ('want', 'VBP'), ...]
# [('We', 'PRON'), ('want', 'VERB'), ...]
```

# Distributional analysis

Commonly used to determine the category (part of speech) of a word.

    # Load a Text
    import nltk
    from nltk.corpus import brown

    text = nltk.Text(word.lower() for word in brown.words())

# similar()

    # text.similar(w) lists words that occur in the same contexts as w
    text.similar('woman')
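The idea behind `similar()` can be sketched in plain Python: two words are distributionally similar when they share (previous word, next word) contexts. This is a simplified illustration on an invented toy corpus, not NLTK's implementation; `shared_context_words` is a hypothetical helper.

```python
from collections import defaultdict

def shared_context_words(tokens, target):
    """Rank words by how many (previous, next) contexts they share with target."""
    padded = ["<s>"] + [t.lower() for t in tokens] + ["</s>"]
    contexts = defaultdict(set)
    for prev, word, nxt in zip(padded, padded[1:], padded[2:]):
        contexts[word].add((prev, nxt))

    target_contexts = contexts.get(target, set())
    scores = {
        word: len(ctx & target_contexts)
        for word, ctx in contexts.items()
        if word != target and ctx & target_contexts
    }
    # most shared contexts first, ties broken alphabetically
    return sorted(scores, key=lambda w: (-scores[w], w))

# Toy corpus, invented for this sketch
tokens = ("the woman sat . the man sat . the woman slept . "
          "the man slept . a dog barked").split()
result = shared_context_words(tokens, "woman")
print(result)
```

Words that fill the same slots tend to belong to the same category, which is why this kind of analysis helps to confirm a word class.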

# Reading the tags that ship with a corpus

    from nltk.corpus import brown

    brown_tagged = brown.tagged_words(categories='news', tagset='universal')
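With (word, tag) pairs at hand, the tag distribution of a single word can be tabulated with `nltk.ConditionalFreqDist`. The sketch below uses a small hand-written sample in place of `brown_tagged`, so that it runs without downloading the corpus; the sample data is invented.

```python
import nltk

# Hand-written stand-in for brown_tagged (invented for this sketch)
sample_tagged = [
    ("The", "DET"), ("run", "NOUN"), ("was", "VERB"), ("long", "ADJ"),
    ("They", "PRON"), ("run", "VERB"), ("daily", "ADV"),
    ("We", "PRON"), ("run", "VERB"),
]

# Condition on the lowercased word, count its tags
cfd = nltk.ConditionalFreqDist((word.lower(), tag) for word, tag in sample_tagged)

print(cfd["run"].most_common())
print(cfd["run"].max())  # the most frequent tag for "run"
```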

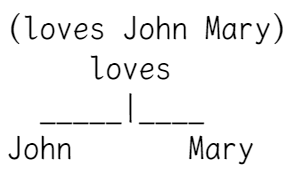

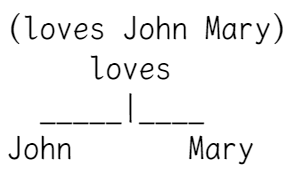

# Sentence structure analysis

# Dependency Graph

    from nltk.parse import DependencyGraph

    # one token per line: word, tag, index of the head (0 = root)
    sent = """John N 2
    loves V 0
    Mary N 2
    """

    dg = DependencyGraph(sent)
    tree = dg.tree()

    print(tree)
    tree.pretty_print(unicodelines=False)
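Besides the tree view, the graph can be read as a list of head/dependent pairs through `DependencyGraph.triples()`. A short sketch on the same sentence; since the three-column input carries no relation labels, only the words are printed here.

```python
from nltk.parse import DependencyGraph

dg = DependencyGraph("""John N 2
loves V 0
Mary N 2
""")

# The root is the token whose head index is 0
print(dg.root["word"])

# Each triple is ((head word, head tag), relation, (dependent word, dependent tag))
pairs = [(head[0], dep[0]) for head, rel, dep in dg.triples()]
for head_word, dep_word in pairs:
    print(head_word, "->", dep_word)
```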

Rendering with IPython's `display()` requires the svgling package (`pip install svgling`):

    from IPython.display import display

    display(dg)

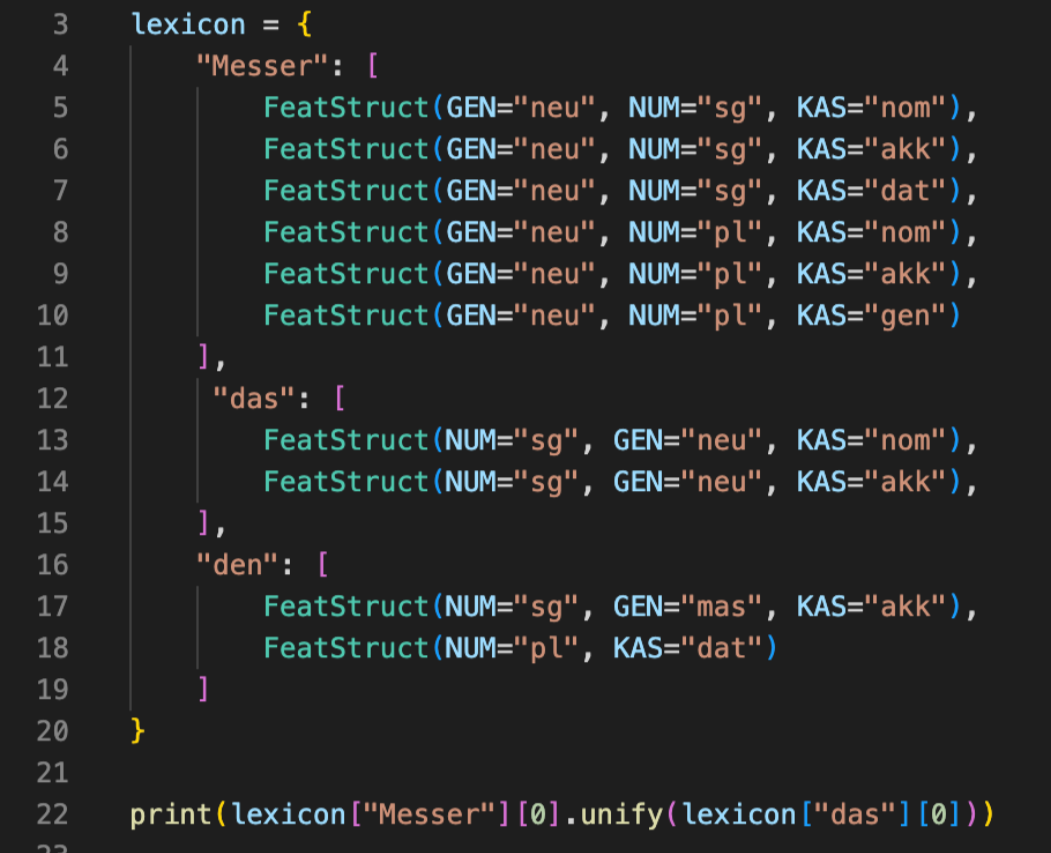

# Feature-based structures

# Creating a FeatStruct

Option 1: keyword arguments

    import nltk
    from nltk import Tree
    from nltk import FeatStruct

    fs1 = FeatStruct(number='singular', person=3)
    print(fs1)

out:

    [ number = 'singular' ]
    [ person = 3          ]

A FeatStruct can be nested inside another one:

    fs2 = FeatStruct(type='NP', agr=fs1)
    print(fs2)

out:

    [ agr  = [ number = 'singular' ] ]
    [        [ person = 3          ] ]
    [                                ]
    [ type = 'NP'                    ]

Option 2: a string in bracket notation

    # folgen (German: "to follow")
    FeatStruct("[CAT=V, LEMMA=folgen," +
               "SYN=[SBJ=?x, OBJ=?y]," +
               "SEM=[AGT=?x, PAT=?y]]")

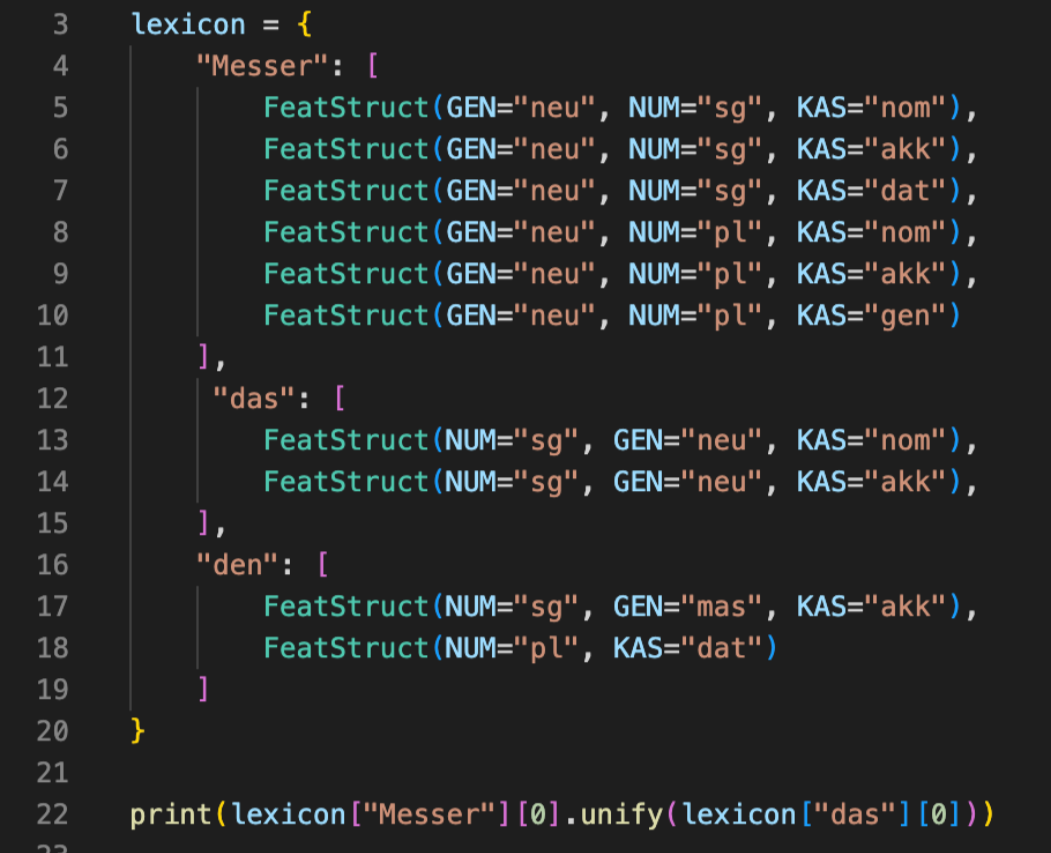

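# Unification

- Returns the feature structure obtained by merging two FeatStructs, or None.

- Unification succeeds only if the contents of the two structures do not conflict.

Continuing from above:

    from nltk.sem.logic import Variable

    # both number features share the variable ?n
    fs3 = FeatStruct(agr=FeatStruct(number=Variable('?n')), subj=FeatStruct(number=Variable('?n')))
    print(fs3)

out:

    [ agr  = [ number = ?n ] ]
    [                        ]
    [ subj = [ number = ?n ] ]

Unifying fs2 with fs3 (`fs2.unify(fs3)`) gives:

    [ agr  = [ number = 'singular' ] ]
    [        [ person = 3          ] ]
    [                                ]
    [ subj = [ number = 'singular' ] ]
    [                                ]
    [ type = 'NP'                    ]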
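A compact sketch of the three typical outcomes: a successful merge, a failure caused by a conflict, and a variable that carries a value from one path to another. The feature names and values are chosen for illustration.

```python
from nltk import FeatStruct

fs_a = FeatStruct("[NUM=sg, GEN=mask]")
fs_b = FeatStruct("[NUM=sg, CASE=nom]")
fs_c = FeatStruct("[NUM=pl]")

# Compatible structures are merged into one
merged = fs_a.unify(fs_b)
print(merged)

# Conflicting values (sg vs. pl) make unification fail
clash = fs_a.unify(fs_c)
print(clash)

# A shared variable passes the subject on to the agent role
frame = FeatStruct("[SYN=[SBJ=?x], SEM=[AGT=?x]]")
linked = frame.unify(FeatStruct("[SYN=[SBJ=Hund]]"))
print(linked)
```

`unify` does not modify its operands; it returns a new structure or `None`.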

# Creating a feature grammar

    # Feature grammar with NP agreement:
    gramstring = r"""
    % start NP
    NP[AGR=?x] -> DET[AGR=?x] N[AGR=?x]
    N[AGR=[NUM=sg, GEN=mask]] -> "Hund"
    N[AGR=[NUM=sg, GEN=fem]] -> "Katze"
    DET[AGR=[NUM=sg, GEN=mask, CASE=nom]] -> "der"
    DET[AGR=[NUM=sg, GEN=mask, CASE=akk]] -> "den"
    DET[AGR=[NUM=sg, GEN=fem]] -> "die"
    """

This grammar can then be used to parse sentences; notebook/06-vorlesung contains examples.

out:

    [ GEN = 'neu' ]
    [ NUM = 'sg'  ]
    [ KAS = 'nom' ]

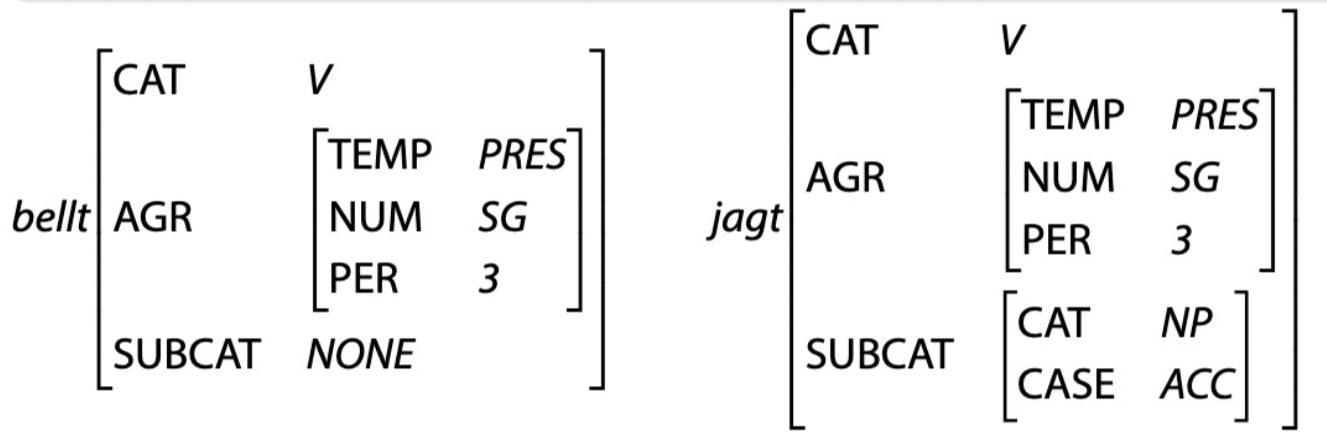

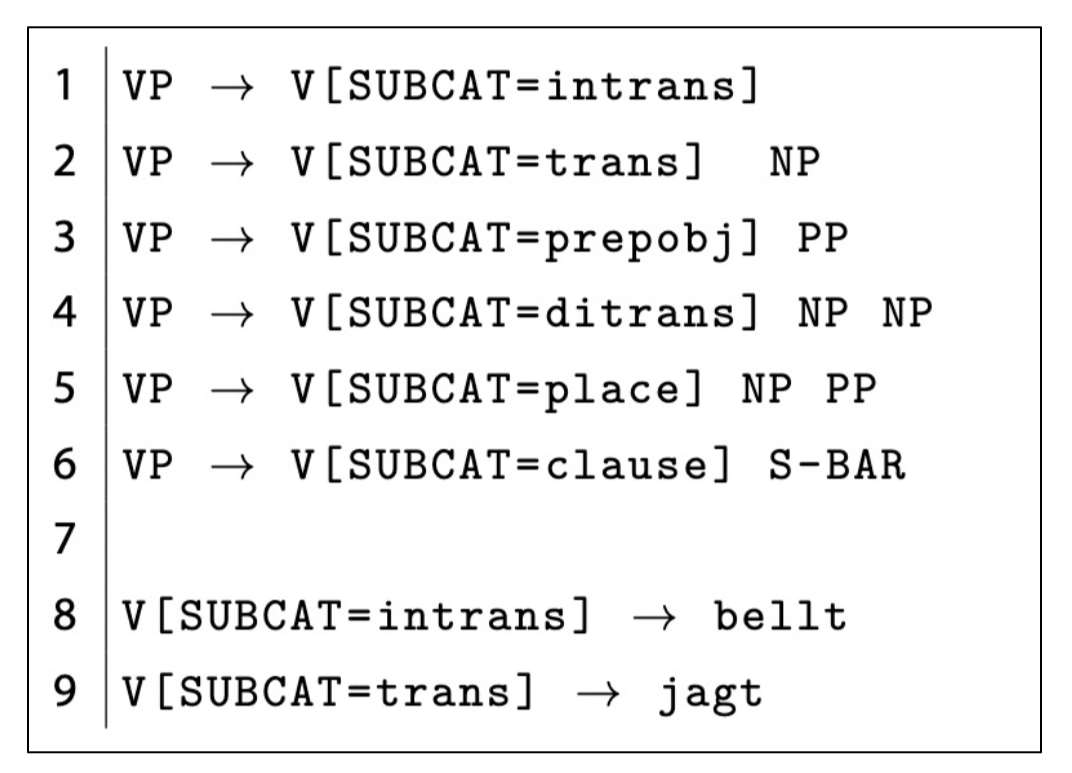

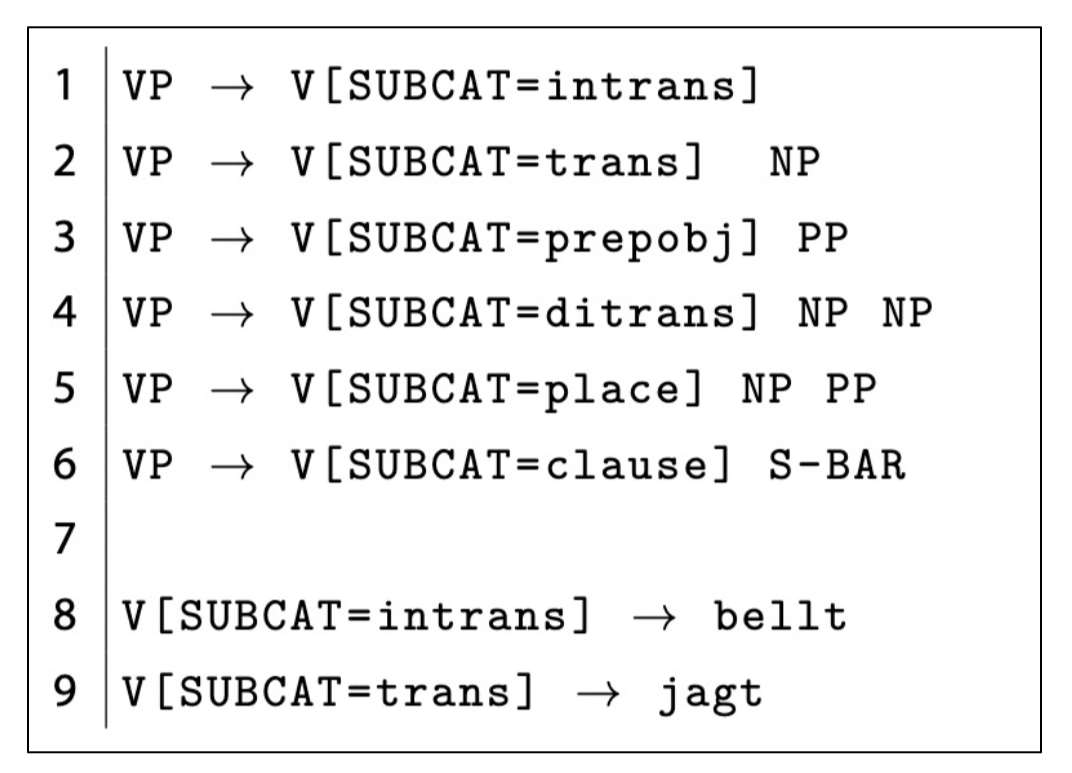

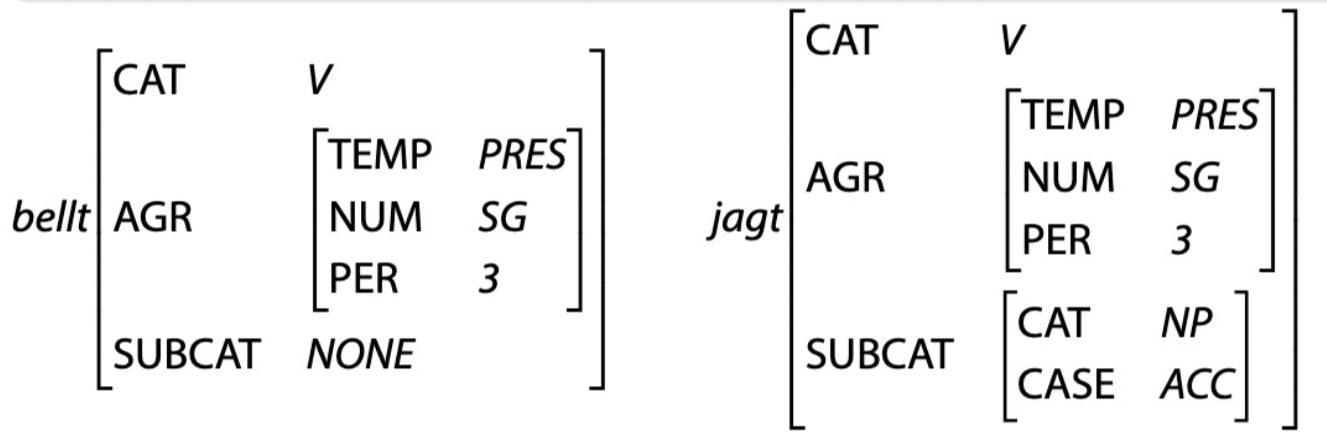

# GPSG

- Generalized Phrase Structure Grammar

- The subtype of V is annotated

# HPSG

- Head-driven Phrase Structure Grammar

- The relevant information is annotated in the verb's feature structure

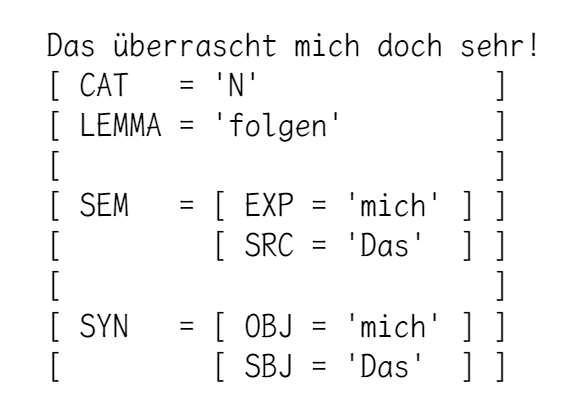

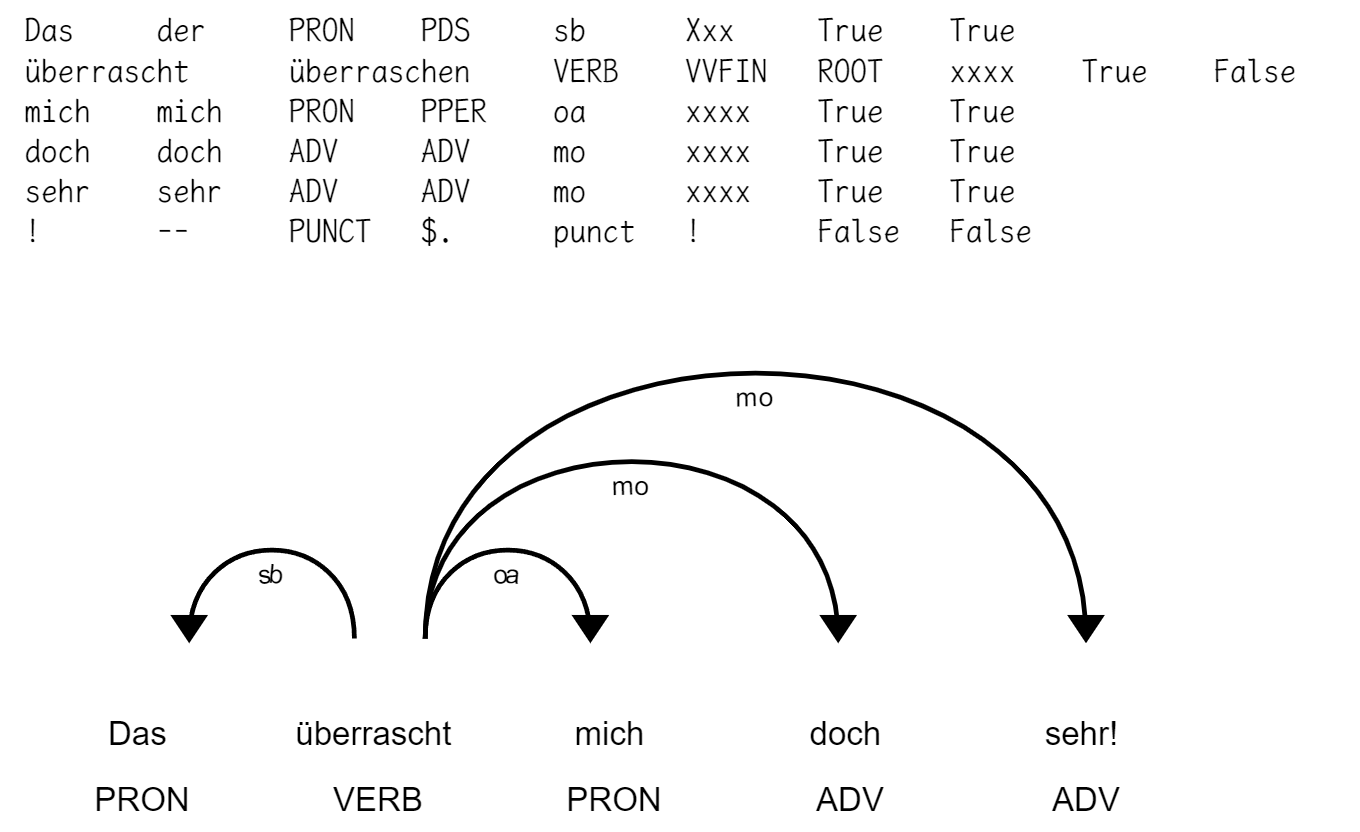

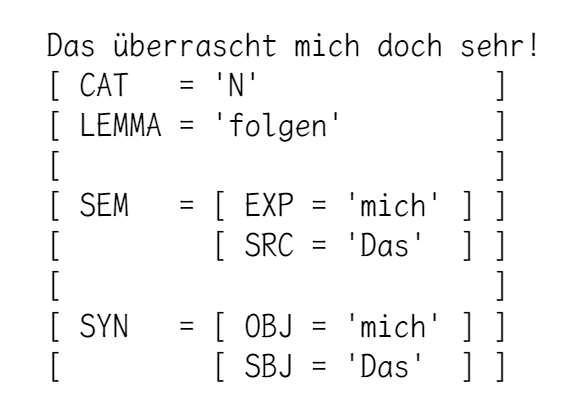

# Getting the FeatStruct of a sentence's verb

    import spacy
    from nltk import FeatStruct

    nlp = spacy.load('de_core_news_sm')

    # In: sentence as a string
    # Out: semantic feature structure
    # lexicon maps verb lemmas to FeatStructs; it is defined elsewhere and not shown here
    def semantic_parse(sentence):
        sbj, obj, verb = None, None, None
        analyzed = nlp(sentence)

        for token in analyzed:
            if token.dep_ == 'sb':                # subject
                sbj = token.text
            elif token.dep_ in ('oa', 'da'):      # accusative or dative object
                obj = token.text
            elif token.pos_ == 'VERB':
                verb = token.lemma_

        if sbj is None or obj is None or verb is None:
            raise RuntimeError('I could not identify all relevant parts: {} - {} - {}'.format(sbj, verb, obj))

        # fill the verb's lexicon entry with the subject and object that were found
        return lexicon[verb].unify(
            FeatStruct(SYN=FeatStruct(SBJ=sbj, OBJ=obj))
        )

    # sentences is a list of sentence strings (not shown here)
    for sent in sentences:
        fs = semantic_parse(sent)
        print()
        print(sent)
        print(fs)

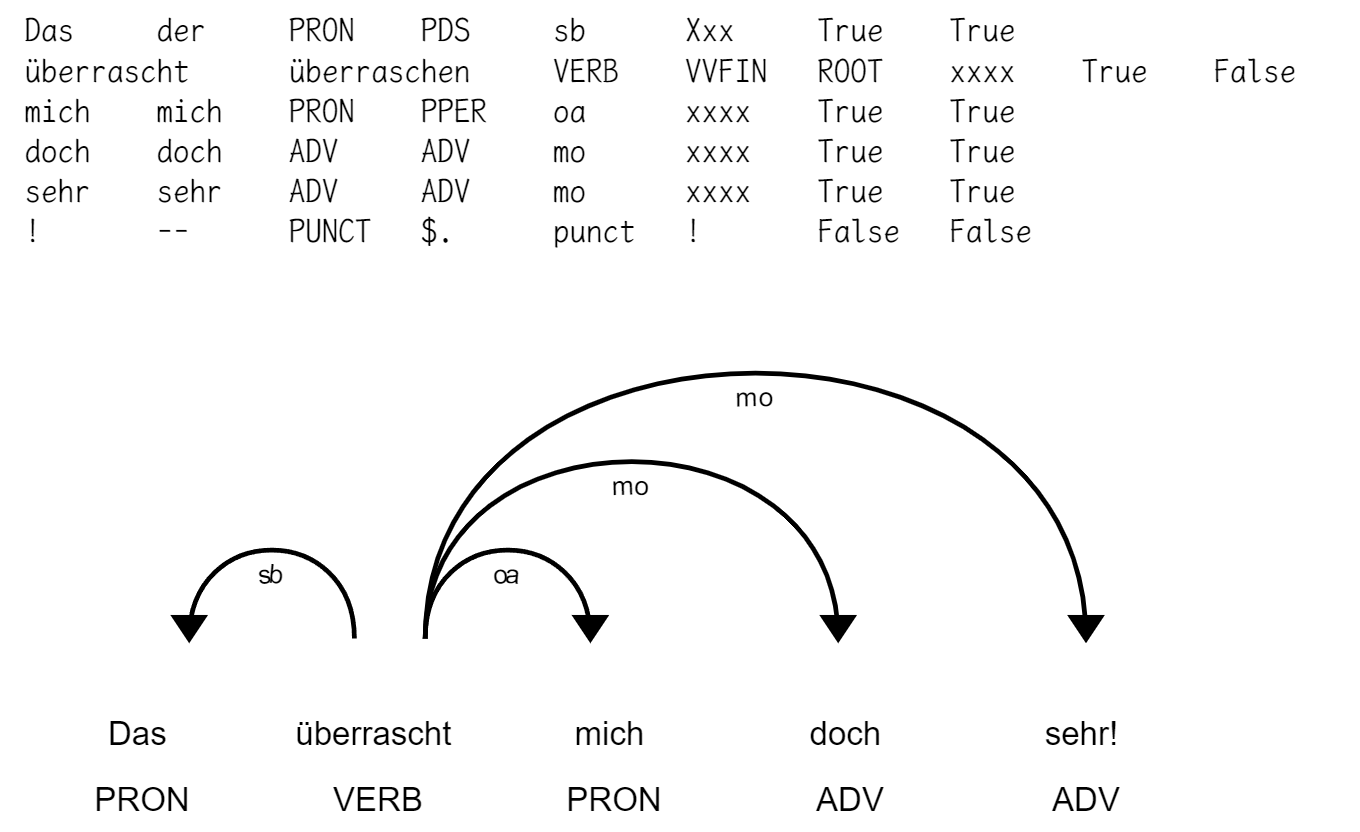

    from spacy import displacy

    for sentence in sentences:
        sent = nlp(sentence)
        # print the spaCy analysis of every token, tab-separated
        for token in sent:
            print(token.text, token.lemma_, token.pos_, token.tag_, token.dep_,
                  token.shape_, token.is_alpha, token.is_stop, sep='\t')
        displacy.render(sent, style='dep', options={'distance': 100})

# Subsumption

- `f1.subsumes(f2)` returns True or False.

- It is True when everything contained in f1 is also contained in f2.
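A minimal sketch of the relation, with feature values chosen for illustration: the more general structure subsumes the more specific one, but not the other way round.

```python
from nltk import FeatStruct

general = FeatStruct("[NUM=sg]")
specific = FeatStruct("[NUM=sg, GEN=mask]")
other = FeatStruct("[NUM=pl, GEN=mask]")

# Everything in general is also in specific
print(general.subsumes(specific))
# specific has GEN=mask, which general lacks
print(specific.subsumes(general))
# NUM=sg is not part of other
print(general.subsumes(other))
```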

# Parsers

PCFG(u12)

    from collections import defaultdict

    import nltk
    from nltk import PCFG
    from nltk.corpus import treebank
    from nltk.grammar import ProbabilisticProduction

    # Induce weighted grammar rules from treebank.parsed_sents()

    # Production count: the number of times a given production occurs
    pcount = defaultdict(int)
    # LHS count: the number of times a given lhs occurs
    lcount = defaultdict(int)

    for tree in treebank.parsed_sents():
        for p in tree.productions():
            pcount[p] += 1
            lcount[p.lhs()] += 1

    productions = [
        ProbabilisticProduction(
            p.lhs(), p.rhs(),
            # relative frequency of the rule among all rules with the same lhs
            prob=pcount[p] / lcount[p.lhs()]
        )
        for p in pcount
    ]

    # test (test_sentences is a list of sentence strings, not shown here)
    start = nltk.Nonterminal('S')
    grammar = PCFG(start, productions)
    parser = nltk.ViterbiParser(grammar)

    for s in test_sentences:
        for t in parser.parse(nltk.word_tokenize(s)):
            print(t.prob())
            t.pretty_print(unicodelines=False)
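The same relative-frequency estimate is available as `nltk.grammar.induce_pcfg`. The sketch below applies it to two hand-written trees (invented for this illustration) instead of the treebank, so it runs without corpus data.

```python
from nltk import Tree
from nltk.grammar import Nonterminal, induce_pcfg

# Two toy trees standing in for treebank.parsed_sents()
trees = [
    Tree.fromstring("(S (NP I) (VP (V sleep)))"),
    Tree.fromstring("(S (NP I) (VP (V see) (NP you)))"),
]

# Collect every production used in the trees, duplicates included
productions = [p for tree in trees for p in tree.productions()]

# Probability = count(rule) / count(rules with the same left-hand side)
grammar = induce_pcfg(Nonterminal("S"), productions)

probs = {
    (str(p.lhs()), tuple(str(sym) for sym in p.rhs())): p.prob()
    for p in grammar.productions()
}
for rule, prob in sorted(probs.items()):
    print(rule, round(prob, 3))
```

For example, NP is expanded three times in the toy trees, twice as 'I' and once as 'you', so the two rules receive 2/3 and 1/3.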
